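The paper “DFTI: Dual-branch Fusion Network based on Transformer and Inception for Space Non-cooperative Objects”, with lab PhD student Zhang Zhao (张钊) as first author and Professor Sun Guanghui (孙光辉) as corresponding author, has been accepted by the international journal IEEE Transactions on Instrumentation and Measurement.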

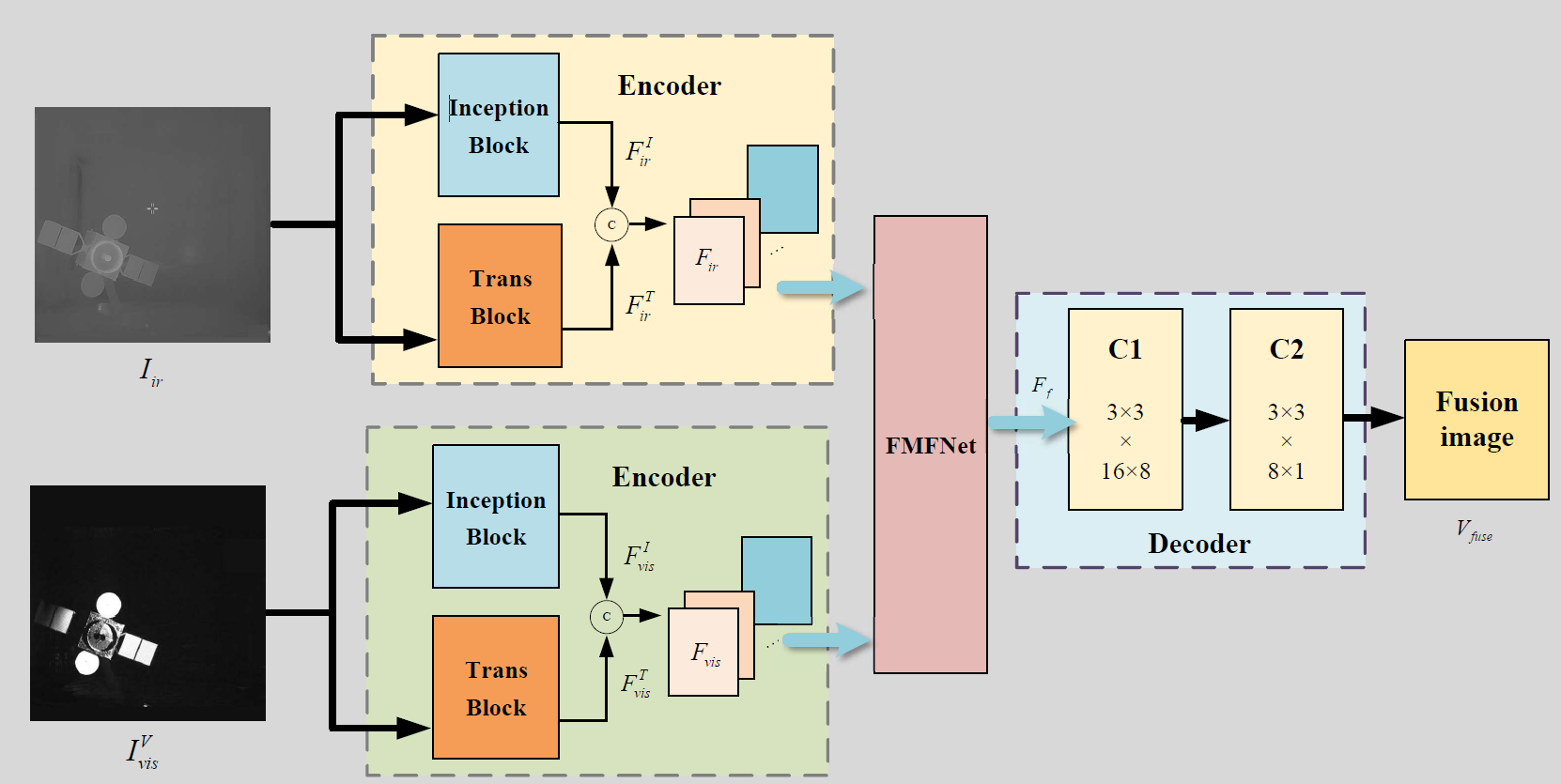

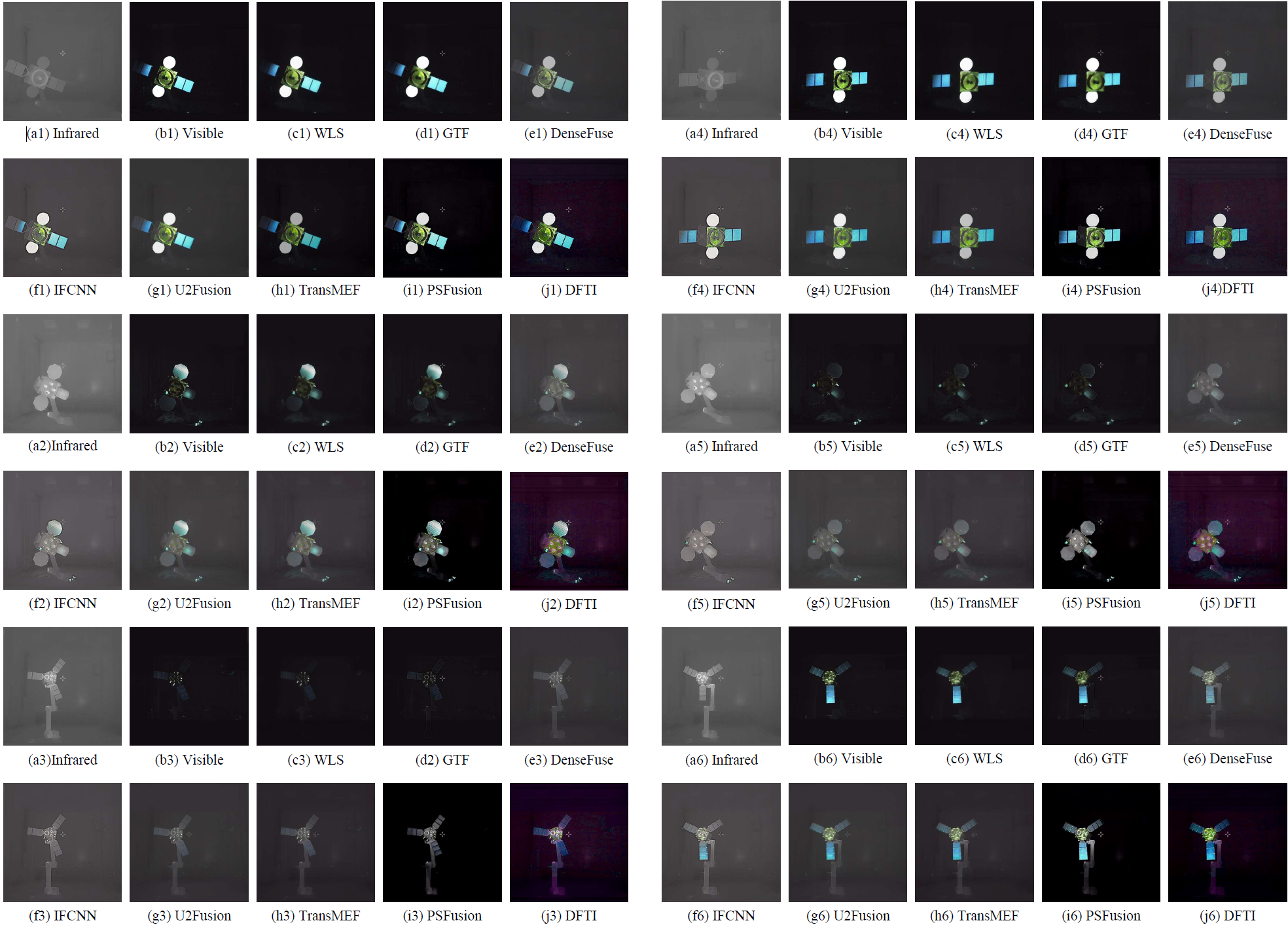

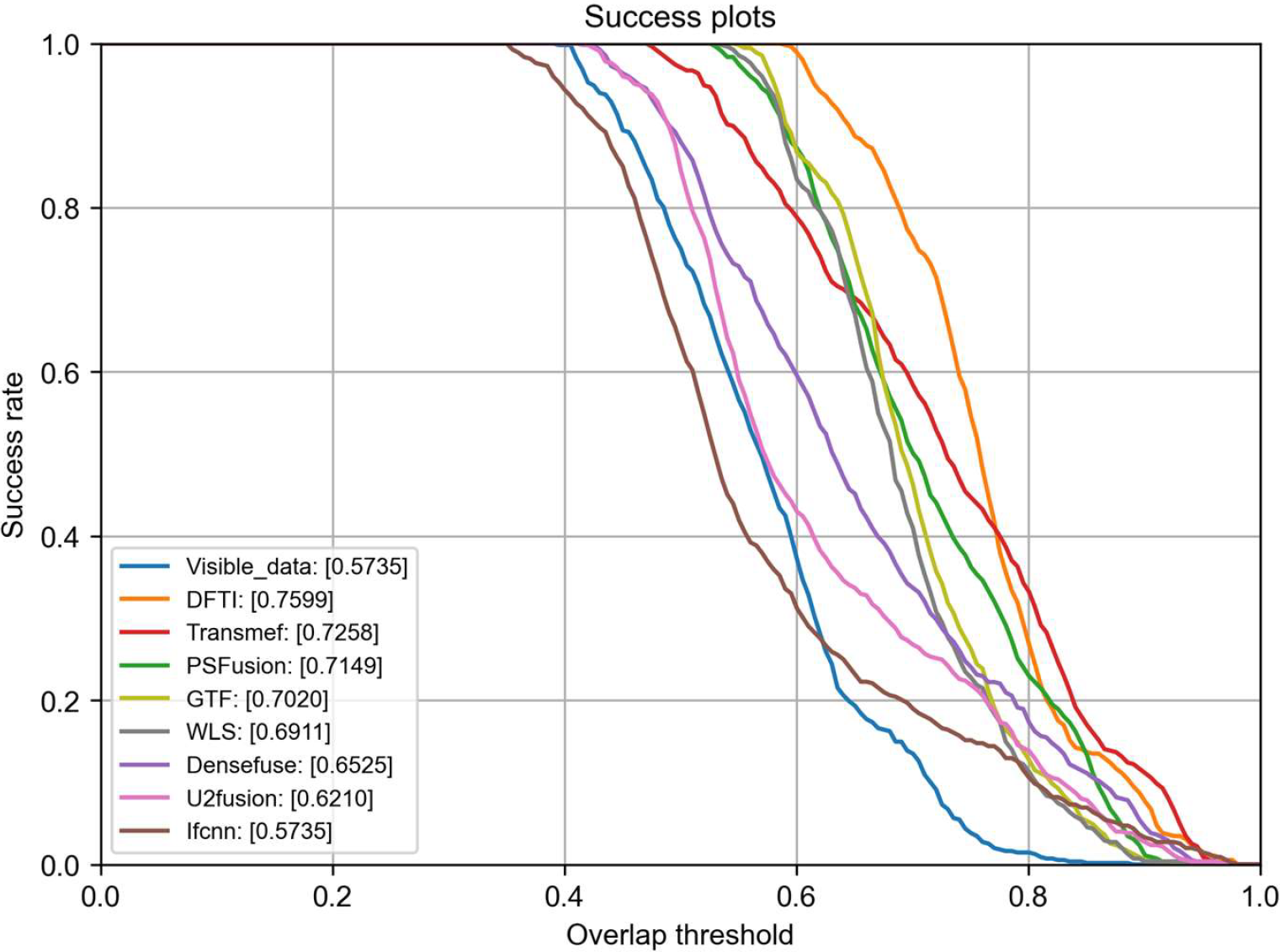

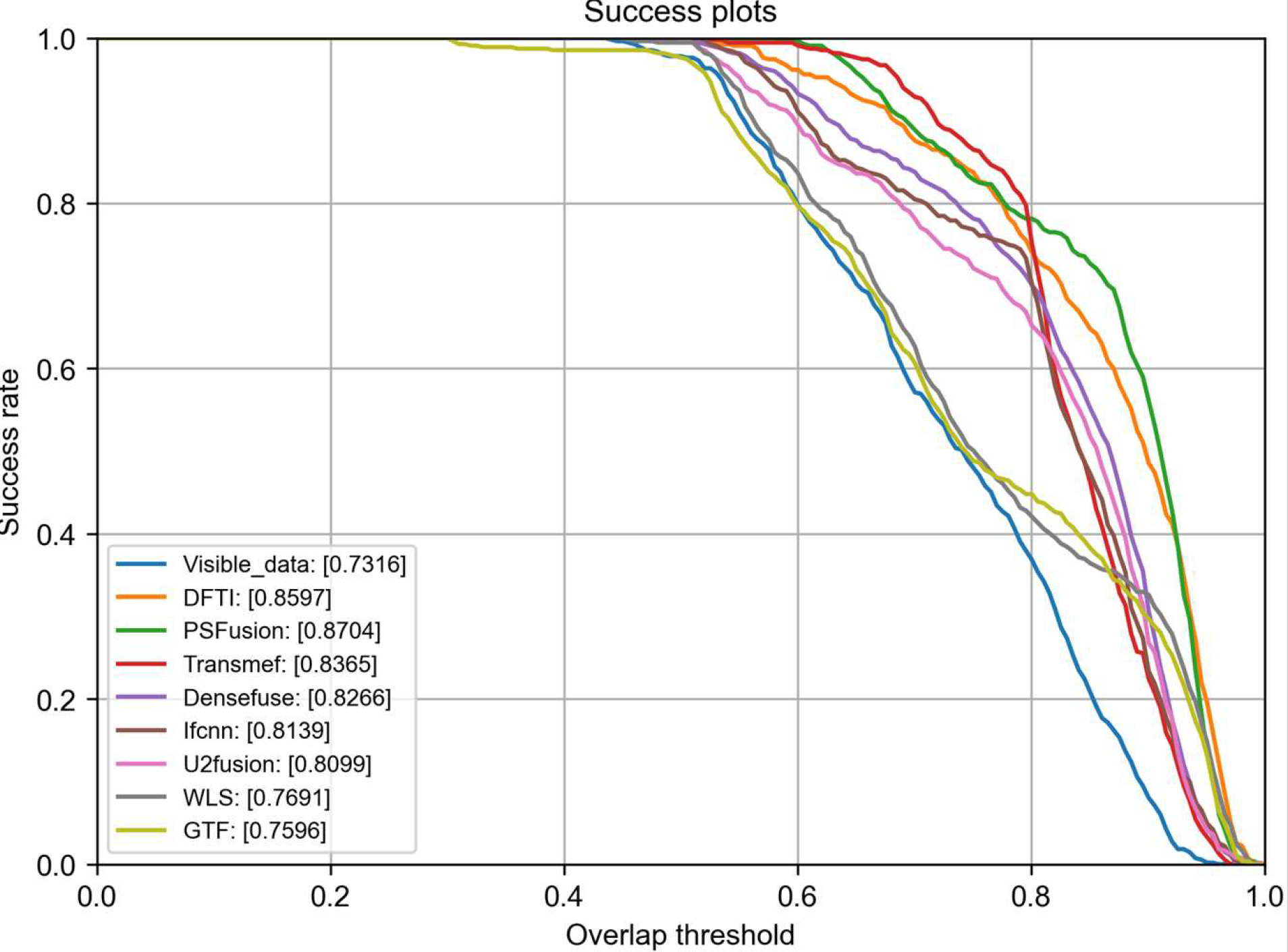

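Because illumination conditions in space are harsh, perception of space non-cooperative objects based on multi-source image fusion is crucial for on-orbit maintenance and orbital debris removal. The paper first designs a dual-branch, multi-scale feature extraction encoder that combines Transformer and Inception modules to extract global and local features from visible and infrared images and to establish high-dimensional semantic connections. It then proposes a feature fusion module based on cross-convolution feature fusion. Together these form a dual-branch fusion network for space non-cooperative objects, built on an auto-encoder architecture and trained with unsupervised learning. The fused image simultaneously retains the color, texture details, and contour energy information of space non-cooperative objects. The paper also presents, for the first time, an infrared and visible image fusion dataset for space non-cooperative objects (FIV-SNO), and uses object tracking as a follow-up high-level vision task. Experimental results show that the method performs very well in both subjective visual quality and objective quantitative evaluation.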

Abstract

Due to the adverse illumination in space, non-cooperative object perception based on multi-source image fusion is crucial for on-orbit maintenance and orbital debris removal. In this paper, we first propose a dual-branch multi-scale feature extraction encoder that combines a Transformer block and an Inception block to extract global and local features of visible and infrared images and to establish high-dimensional semantic connections. Second, in contrast to traditional hand-crafted fusion strategies, we propose a feature fusion module called the Cross-Convolution Feature Fusion (CCFF) module, which performs fusion at the image feature level. Building on these components, we propose a Dual-branch Fusion Network based on Transformer and Inception (DFTI) for space non-cooperative objects, an image fusion framework based on an auto-encoder architecture and unsupervised learning. The fused image simultaneously retains the color, texture details, and contour energy information of space non-cooperative objects. Finally, we construct a fusion dataset of infrared and visible images for space non-cooperative objects (FIV-SNO) and compare DFTI with seven state-of-the-art methods. In addition, object tracking as a follow-up high-level vision task demonstrates the effectiveness of our method. The experimental results show that, compared with other advanced methods, the entropy (EN) and average gradient (AG) of the fused images produced by the DFTI network increase by 0.11 and 0.06, respectively. Our method exhibits excellent performance in both quantitative measures and qualitative evaluation.
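For readers unfamiliar with the two metrics cited in the abstract, the following is a minimal sketch of how entropy (EN) and average gradient (AG) are commonly computed for a grayscale image. It assumes the widely used definitions (Shannon entropy of the gray-level histogram, and the mean of sqrt((dx² + dy²) / 2) over forward differences); the paper's exact formulation and normalization may differ. The function names `entropy` and `average_gradient` are illustrative and are not taken from the paper.

```python
import math
from collections import Counter


def entropy(img):
    """Shannon entropy (in bits) of the gray-level histogram of a 2-D image.

    `img` is a list of rows of integer gray levels (assumed format).
    """
    pixels = [p for row in img for p in row]
    total = len(pixels)
    counts = Counter(pixels)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def average_gradient(img):
    """Average gradient from forward differences (common definition, assumed).

    AG = mean over pixels of sqrt((dx^2 + dy^2) / 2), where dx and dy are
    horizontal and vertical differences to the neighbouring pixel.
    """
    rows, cols = len(img), len(img[0])
    acc = 0.0
    for i in range(rows - 1):
        for j in range(cols - 1):
            dx = img[i][j + 1] - img[i][j]
            dy = img[i + 1][j] - img[i][j]
            acc += math.sqrt((dx * dx + dy * dy) / 2.0)
    return acc / ((rows - 1) * (cols - 1))


if __name__ == "__main__":
    # Tiny synthetic image: left column dark, right column bright.
    fused = [[0, 255], [0, 255]]
    print("EN:", entropy(fused))
    print("AG:", average_gradient(fused))
```

Under these definitions, a higher EN indicates that the fused image carries more information, and a higher AG indicates sharper edges and richer texture detail, which is why increases in both are reported as improvements.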