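Recently, the paper "Safety Reinforcement Learning Control via Transfer Learning", with the lab's PhD student Quanqi Zhang (张权麒) as first author and lab faculty member Chengwei Wu (吴承伟) as corresponding author, was accepted by Automatica, a leading journal in the control field.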

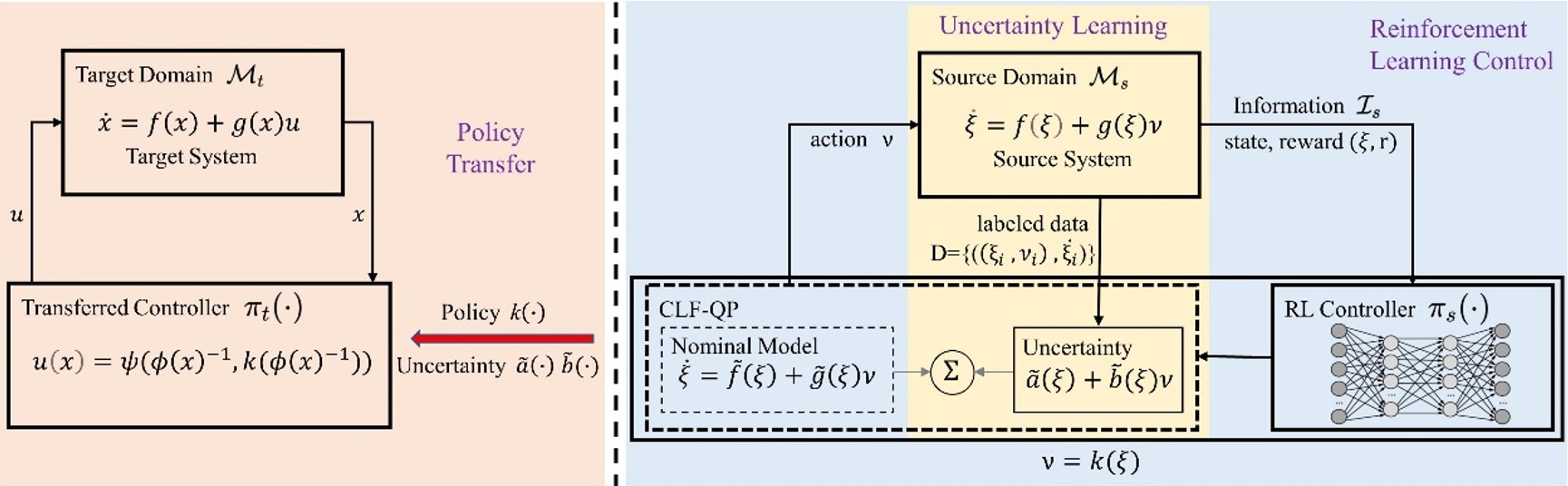

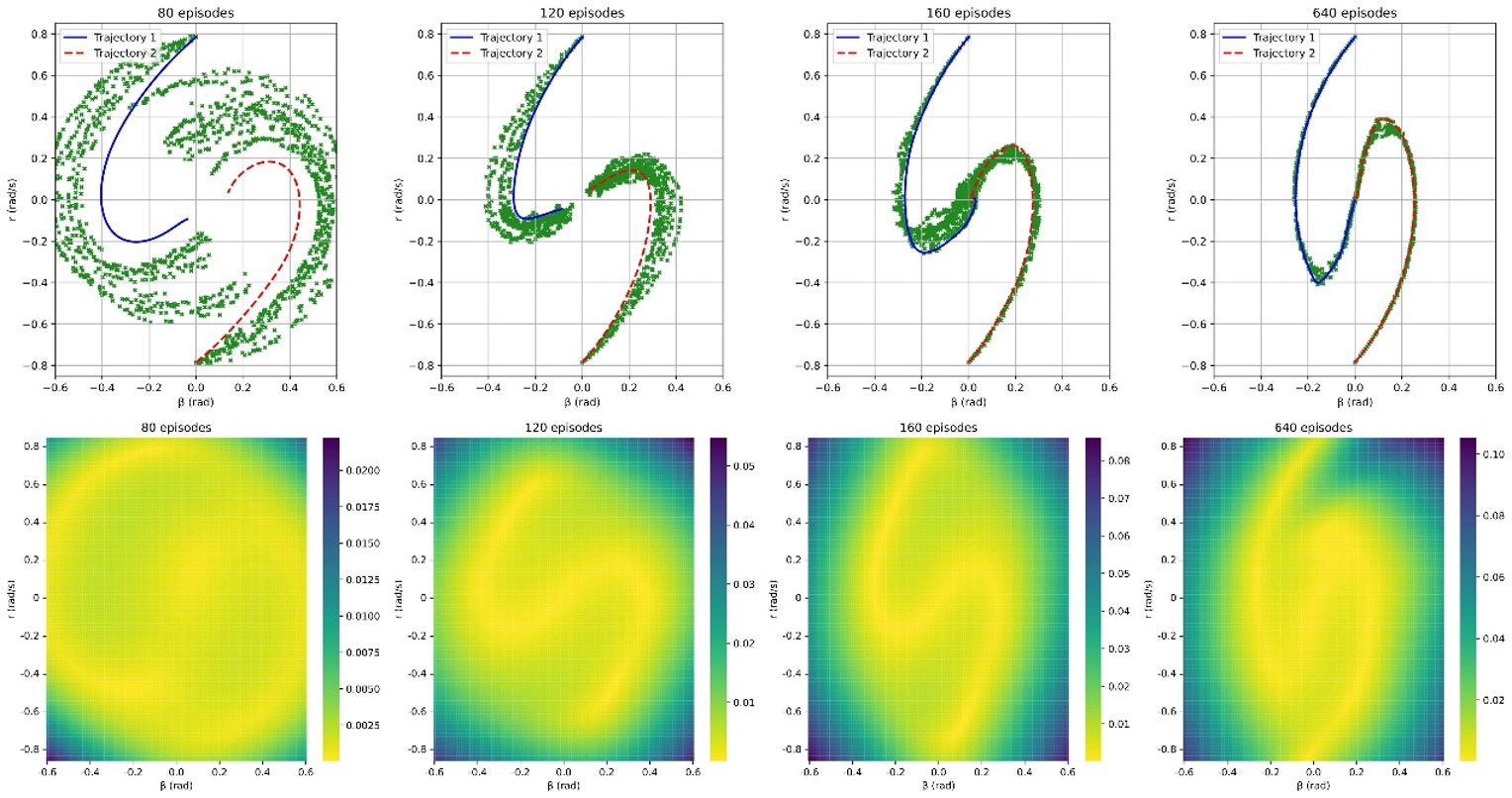

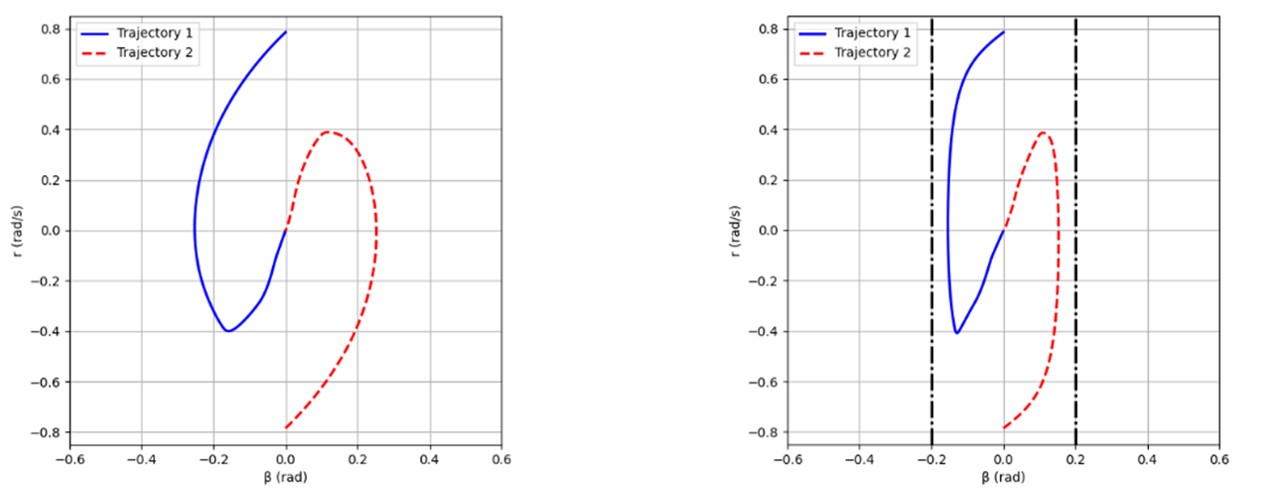

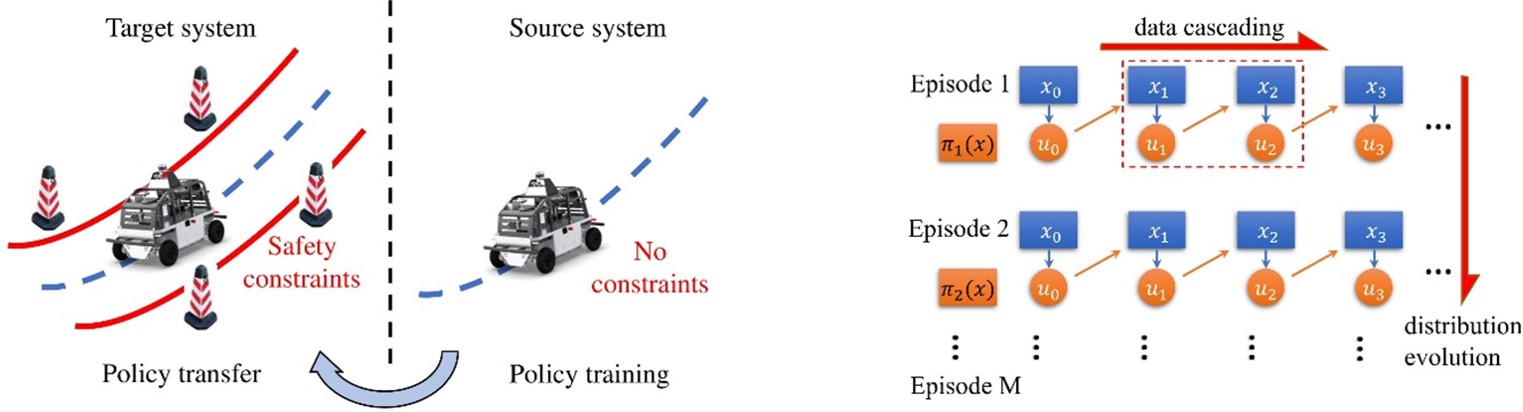

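Reinforcement learning is a promising control method for modern control systems. However, its exploration and trial-and-error mechanism lacks safety guarantees, which has limited its use in real-world applications. To address this problem, the paper proposes a novel transfer learning framework in which the learner trains a policy in a non-dangerous environment and then transfers the trained policy to the original dangerous environment. The paper proves theoretically that the transferred policy guarantees the safety and stability of the original system. In addition, because policy transfer requires an accurate model, the paper proposes an uncertainty learning algorithm, built into the reinforcement learning framework, that obtains an accurate model while the policy is being trained. The proposed transfer learning framework avoids exploration by the learner in unsafe environments, fundamentally resolving the safety problem of reinforcement learning control during training. Finally, simulations of vehicle lateral safety and stability control verify the effectiveness and advantages of the proposed method.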

Abstract

Reinforcement learning (RL) has emerged as a promising approach for modern control systems. However, its success in real-world applications has been limited due to the lack of safety guarantees. To address this issue, we present a novel transfer learning framework that facilitates policy training in a non-dangerous environment, followed by transfer of the trained policy to the original dangerous environment. The transferred policy is theoretically proven to stabilize the original system while maintaining safety. Additionally, we propose an uncertainty learning algorithm incorporated in RL that overcomes natural data cascading and data evolution problems in RL to enhance learning accuracy. The transfer learning framework avoids trial-and-error in unsafe environments, ensuring not only after-learning safety but, more importantly, addressing the challenging problem of safe exploration during learning. Simulation results demonstrate the promise of the transfer learning framework for RL safety control on the task of vehicle lateral stability control with safety constraints.